
IEEE, 2017 (Digital Image Processing)

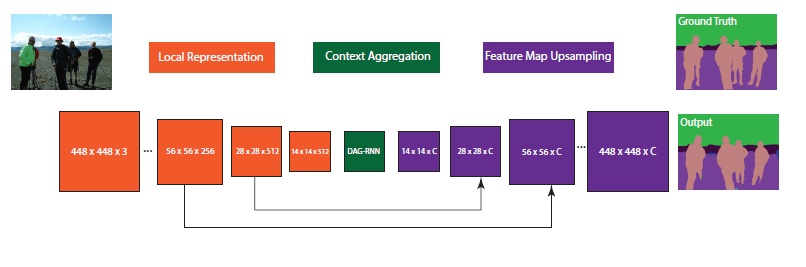

In this paper, we address the challenging task of scene segmentation. To capture the rich contextual dependencies among image regions, we propose Directed Acyclic Graph Recurrent Neural Networks (DAG-RNN), which perform context aggregation over locally connected feature maps. More specifically, DAG-RNN is placed on top of a pre-trained CNN (the feature extractor) and embeds context into the local features, enhancing their representational power. Compared with plain CNNs (as in Fully Convolutional Networks, FCNs), DAG-RNN is empirically found to be significantly more effective at aggregating context, and it therefore noticeably outperforms FCNs on scene segmentation. Moreover, DAG-RNN requires far fewer parameters and fewer computational operations, which makes it a better candidate for resource-constrained embedded devices. Because class occurrence frequencies in scene segmentation are extremely imbalanced, we also propose a novel class-weighted loss to train the segmentation network. The loss assigns higher weights to infrequent classes during training, which is essential for improving their parsing performance. We evaluate our segmentation network on three challenging public scene segmentation benchmarks, SIFT Flow, Pascal Context and COCO Stuff, and achieve strong segmentation performance on all of them.
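The abstract does not state the exact form of the class-weighted loss. As a minimal sketch, assuming median-frequency balancing (a common weighting scheme, not necessarily the one used in the paper), each class c gets the weight w_c = median(f) / f_c, where f_c is the fraction of labelled pixels belonging to c, and the per-pixel cross-entropy is averaged with these weights. The function names are hypothetical.

```python
import numpy as np


def median_frequency_weights(labels: np.ndarray, num_classes: int) -> np.ndarray:
    """Per-class weights w_c = median(f) / f_c (median-frequency balancing).

    Assumption: this stands in for the paper's class-weighted loss. Classes
    absent from `labels` get weight 0 because they never contribute a term.
    """
    counts = np.bincount(labels.ravel(), minlength=num_classes).astype(np.float64)
    freq = counts / counts.sum()
    present = counts > 0
    weights = np.zeros(num_classes)
    weights[present] = np.median(freq[present]) / freq[present]
    return weights


def weighted_cross_entropy(logits: np.ndarray, labels: np.ndarray, weights: np.ndarray) -> float:
    """Class-weighted cross-entropy over N pixels.

    logits: (N, C) unnormalized class scores; labels: (N,) integer class ids.
    """
    # Numerically stable log-softmax.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    nll = -log_probs[np.arange(labels.shape[0]), labels]
    w = weights[labels]
    # Weighted mean: pixels of rare classes (large w) contribute more to the loss.
    return float((w * nll).sum() / w.sum())


labels = np.array([0] * 6 + [1] * 3 + [2])  # toy pixel labels: class 2 is rare
weights = median_frequency_weights(labels, num_classes=3)  # ≈ [0.5, 1.0, 3.0]
loss = weighted_cross_entropy(np.zeros((10, 3)), labels, weights)  # uniform scores → log(3)
```

For a predicted score map of shape (H, W, C), flatten it to (H*W, C) and the label map to (H*W,) before calling `weighted_cross_entropy`. Normalizing by the sum of the weights keeps the loss on the same scale as ordinary cross-entropy while shifting the emphasis toward infrequent classes.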

Existing methods: Deep Parsing Network, Deep Structured Model, Dilated Convolutions.

In this paper, we propose DAG-RNN to capture the rich contextual dependencies among image regions. Specifically, we locally connect the features in the feature maps to form an undirected cyclic graph (UCG), i.e., an undirected graph that contains a closed walk in which no edge is repeated. Because UCGs contain loops, RNNs cannot be applied directly to UCG-structured images. We therefore decompose the UCG into several directed acyclic graphs (DAGs); in other words, a UCG-structured image is represented as a combination of several DAG-structured images. We then develop DAG-RNN, a generalization of RNNs, to explicitly pass local context along the directed graph structure. In this way, context is explicitly propagated and encoded into the feature maps.
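To make the decomposition and the recurrence concrete, here is a minimal NumPy sketch under stated assumptions: the locally connected graph is taken to be an 8-connected pixel grid, the UCG is split into four DAGs (southeast, southwest, northeast, northwest), and each vertex v updates as h_v = tanh(U x_v + Σ_{p ∈ P(v)} W h_p + b), where P(v) are the predecessors of v in that DAG. The four directional hidden maps are projected, summed and passed through a ReLU. The nonlinearities, the parameter sharing and all function names are illustrative assumptions, not the paper's exact configuration.

```python
import numpy as np

# Assumption: the UCG is an 8-connected pixel grid, decomposed into four DAGs.
# Each entry is (row step, column step): southeast, southwest, northeast, northwest.
DIRECTIONS = [(1, 1), (1, -1), (-1, 1), (-1, -1)]


def dag_rnn_direction(x, U, Wh, b, di, dj):
    """One DAG sweep over a feature map x of shape (height, width, d_in).

    In the DAG with direction (di, dj), vertex (i, j) has predecessors
    (i - di, j), (i, j - dj) and (i - di, j - dj). Scanning rows in direction di
    and columns in direction dj is a topological order, so every predecessor's
    hidden state is ready before it is needed.
    """
    height, width, _ = x.shape
    h = np.zeros((height, width, U.shape[0]))
    rows = range(height) if di == 1 else range(height - 1, -1, -1)
    cols = range(width) if dj == 1 else range(width - 1, -1, -1)
    for i in rows:
        for j in cols:
            acc = U @ x[i, j] + b  # local (CNN) feature term
            for pi, pj in ((i - di, j), (i, j - dj), (i - di, j - dj)):
                if 0 <= pi < height and 0 <= pj < width:
                    acc = acc + Wh @ h[pi, pj]  # context from a predecessor
            h[i, j] = np.tanh(acc)
    return h


def dag_rnn(x, params, V, c):
    """Aggregate the four directional sweeps into context-enriched features.

    params: four (U, Wh, b) tuples, one per DAG; V: four (d_out, d_h) matrices;
    c: (d_out,) bias. Output shape: (height, width, d_out).
    """
    out = c
    for (di, dj), (U, Wh, b), Vd in zip(DIRECTIONS, params, V):
        out = out + dag_rnn_direction(x, U, Wh, b, di, dj) @ Vd.T
    return np.maximum(out, 0.0)  # ReLU output (assumed nonlinearity)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 5, 3))  # stand-in for a CNN feature map
params = [(0.5 * rng.standard_normal((8, 3)), 0.5 * rng.standard_normal((8, 8)), np.zeros(8))
          for _ in range(4)]
V = [0.5 * rng.standard_normal((6, 8)) for _ in range(4)]
y = dag_rnn(x, params, V, np.zeros(6))
print(y.shape)  # (4, 5, 6)
```

Each single DAG only carries context "downstream" (in the southeast sweep, the bottom-right vertex sees the whole image while the top-left vertex sees only itself); summing the four sweeps is what gives every position context from all directions. Together, the four DAGs cover every edge of the 8-connected grid in both orientations.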

SEGMENTATION NETWORK