
Category: Electronics, IEEE 2020, Digital Image Processing

Learning-based depth estimation from light fields has made significant progress in recent years. However, most existing approaches are supervised and therefore require large quantities of ground-truth depth data for training. Furthermore, accurate depth maps for light fields are rarely available except in a few synthetic datasets. In this paper, we exploit the multi-orientation epipolar geometry of the light field and propose an unsupervised monocular depth estimation network. It predicts depth from the central view of a light field without any ground-truth information. Inspired by the inherent depth cues and geometric constraints of light fields, we introduce three novel unsupervised loss functions: a photometric loss, a defocus loss and a symmetry loss. We evaluate our method on a public 4D light field synthetic dataset. As the first unsupervised method published on the 4D Light Field Benchmark website, our method achieves satisfactory performance on most error metrics. Comparison experiments with two state-of-the-art unsupervised methods demonstrate the superiority of our method. We also demonstrate the effectiveness and generality of our method on real-world light field images.
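The abstract names a photometric loss without defining it. Below is a minimal sketch of one common form, under stated assumptions: a grayscale light field stored as plain Python lists, a sub-aperture view at angular offset (du, dv) in which a scene point with disparity d at central pixel (x, y) appears at (x + du·d, y + dv·d), and a plain L1 penalty. The helper names `bilinear_sample`, `warp_to_center` and `photometric_loss` are hypothetical, and the paper's exact loss (for example, any SSIM term or occlusion handling) may differ.

```python
import math


def bilinear_sample(img, x, y):
    """Sample a 2-D grayscale image (list of rows) at real-valued (x, y).

    Coordinates outside the image are clamped to the border.
    """
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
    bottom = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
    return (1 - ay) * top + ay * bottom


def warp_to_center(view, disparity, du, dv):
    """Backward-warp the view at angular offset (du, dv) onto the central view.

    Assumed convention: a point at central pixel (x, y) with disparity d
    appears at (x + du * d, y + dv * d) in that view.
    """
    h, w = len(view), len(view[0])
    return [
        [bilinear_sample(view, x + du * disparity[y][x], y + dv * disparity[y][x])
         for x in range(w)]
        for y in range(h)
    ]


def photometric_loss(center, views, disparity):
    """Hypothetical L1 photometric loss: mean absolute difference between the
    central view and every neighbouring view warped to it with the
    predicted central-view disparity. `views` maps (du, dv) -> image."""
    total, count = 0.0, 0
    for (du, dv), view in views.items():
        warped = warp_to_center(view, disparity, du, dv)
        for row_c, row_w in zip(center, warped):
            for a, b in zip(row_c, row_w):
                total += abs(a - b)
                count += 1
    return total / count
```

Pixels whose warped coordinates leave the image are clamped to the border, so even the correct disparity leaves a small residual at the image edge. A training implementation would express the same warp with differentiable, batched tensor operations, such as `torch.nn.functional.grid_sample` in PyTorch.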

Keywords: convolutional neural networks (CNNs)

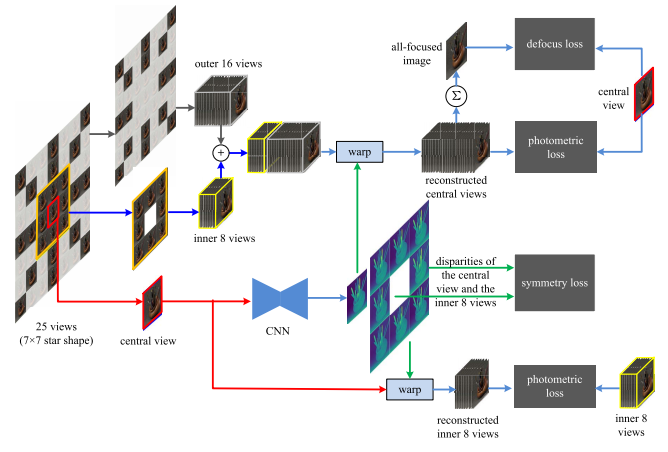

We design an end-to-end unsupervised monocular depth estimation architecture based on the geometric characteristics of the light field. It takes the central view of the light field (i.e. the central sub-aperture image) as input and predicts the disparity maps of the central view and its 8-neighborhood views. To avoid the vanishing gradient problem, the sub-aperture images along four angular directions (horizontal, vertical, left diagonal and right diagonal) are selected to participate in the unsupervised loss computation. We propose a defocus loss to replace the traditional disparity smoothness loss. Refocusing, one of the most distinctive light field techniques, carries an important defocus cue associated with depth. We recover an all-in-focus image and introduce a simple way to compute the defocus loss from the characteristics of defocus blur. Because the all-in-focus process re-samples and sums multiple views, the defocus loss not only provides global constraints but also has some denoising and smoothing ability.
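The view selection and all-in-focus step can be sketched as follows, assuming a standard shift-and-sum refocus: each selected view is re-sampled along its epipolar line at the pixel's own predicted disparity, and the results are averaged with the central view. The defocus term used here, an L1 distance between the all-in-focus image and the central view, is a hypothetical stand-in, because the text above does not give the paper's exact formula. The names `star_offsets`, `sample`, `all_in_focus` and `defocus_loss` are illustrative, and the disparity convention (a point at (x, y) appears at (x + du·d, y + dv·d) in view (du, dv)) is an assumption.

```python
import math


def star_offsets(radius):
    """Angular offsets (du, dv) on the horizontal, vertical and two diagonal
    lines through the central view, excluding the centre itself."""
    offsets = []
    for k in range(1, radius + 1):
        for du, dv in [(1, 0), (0, 1), (1, 1), (1, -1)]:
            offsets.append((k * du, k * dv))
            offsets.append((-k * du, -k * dv))
    return offsets


def sample(img, x, y):
    """Bilinear sample of a list-of-rows image with border clamping."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
    bot = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
    return (1 - ay) * top + ay * bot


def all_in_focus(center, views, disparity):
    """Refocus every pixel at its own predicted disparity: re-sample each
    selected view along its epipolar line, then average with the centre."""
    h, w = len(center), len(center[0])
    out = [[center[y][x] for x in range(w)] for y in range(h)]
    for (du, dv), view in views.items():
        for y in range(h):
            for x in range(w):
                d = disparity[y][x]
                out[y][x] += sample(view, x + du * d, y + dv * d)
    n = len(views) + 1
    return [[v / n for v in row] for row in out]


def defocus_loss(center, views, disparity):
    """Hypothetical defocus loss: with the right disparity the all-in-focus
    image matches the central view; a wrong one blurs it."""
    fused = all_in_focus(center, views, disparity)
    h, w = len(center), len(center[0])
    return sum(abs(fused[y][x] - center[y][x])
               for y in range(h) for x in range(w)) / (h * w)
```

With `radius=1`, `star_offsets` yields exactly the 8-neighborhood views. If the disparity is correct, every re-sampled view lands on the same scene point, so the average reproduces the central view; a wrong disparity averages different scene points, which behaves like defocus blur. Averaging over many views is also what gives such a loss its smoothing effect.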

BLOCK DIAGRAM