

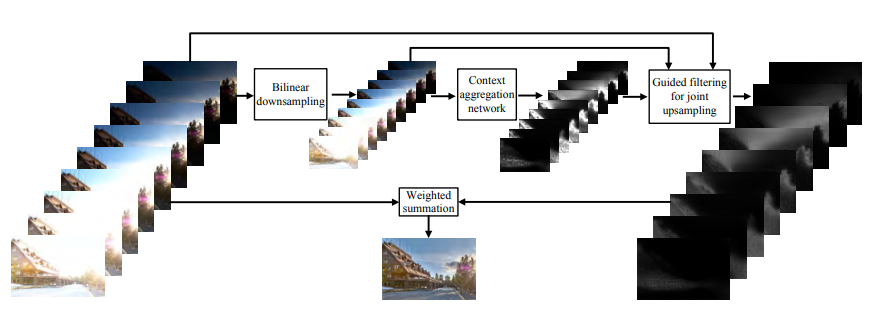

We propose a fast multi-exposure image fusion (MEF) method, namely MEF-Net, for static image sequences of arbitrary spatial resolution and exposure number. We feed a low-resolution version of the input sequence to a fully convolutional network for weight map prediction. We then jointly upsample the weight maps using a guided filter. The final image is computed by a weighted fusion. Unlike conventional MEF methods, MEF-Net is trained end-to-end by optimizing the perceptually motivated MEF structural similarity (MEF-SSIM) index over a database of training sequences at full resolution. Across an independent set of test sequences, we find that the optimized MEF-Net achieves consistent improvement in visual quality for most fused images and runs 10 to 1000 times faster than state-of-the-art methods.
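The weighted fusion step can be written compactly as a per-pixel sum. The symbols below are notational assumptions introduced here for illustration and are not taken from the text: $X_k$ denotes the $k$-th exposure, $W_k$ its upsampled weight map, $K$ the number of exposures, and $p$ a pixel location.

$$
\hat{Y}(p) = \sum_{k=1}^{K} W_k(p)\, X_k(p)
$$

Because the sum runs over however many exposures are supplied, the same expression applies to any $K$.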

Pixel-wise MEF methods

The proposed work targets three goals: flexibility, speed, and quality. To achieve flexibility, we use a fully convolutional network, which takes an input of arbitrary size and produces an output of the corresponding size (known as dense prediction). The network is shared across the differently exposed images, enabling it to process an arbitrary number of exposures. To achieve speed, we follow the downsample-execute-upsample scheme and feed the network a low-resolution version of the input sequence. Rather than producing the fused image directly, the network learns to generate low-resolution weight maps, which are then jointly upsampled using a guided filter for the final weighted fusion. By doing so, we take advantage of the smooth nature of the weight maps and use the input sequence as the guidance. Directly upsampling the fused image is difficult because the high-resolution sequence contains rich high-frequency information and no proper guidance is available. To achieve quality, we integrate the differentiable guided filter with the preceding network and optimize the entire model end-to-end for the subject-calibrated MEF structural similarity (MEF-SSIM) index over a large number of training sequences. Although most of our inference and learning is performed at low resolution, the objective function MEF-SSIM is measured at full resolution, which encourages the guided filter to cooperate with the convolutional network in generating high-quality fused images.
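The downsample-execute-upsample scheme described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the MEF-Net implementation: `placeholder_weights` is a hypothetical hand-crafted stand-in for the trained convolutional network, nearest-neighbour upsampling of the filter coefficients is a simplification, and the absolute value and normalization of the weight maps, the scale factor `s`, the radius `r`, and `eps` are all illustrative choices. Images are assumed to be grayscale arrays in [0, 1] whose height and width are multiples of `s`.

```python
import numpy as np

def box_mean(x, r):
    """Mean over a (2r+1) x (2r+1) window, with edge replication."""
    p = np.pad(x, r, mode="edge")
    h, w = x.shape
    out = np.zeros_like(x, dtype=np.float64)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += p[dy:dy + h, dx:dx + w]
    return out / (2 * r + 1) ** 2

def downsample(x, s):
    """Average-pool by an integer factor s (H and W must be multiples of s)."""
    h, w = x.shape
    return x.reshape(h // s, s, w // s, s).mean(axis=(1, 3))

def upsample(x, s):
    """Nearest-neighbour upsampling by an integer factor s (a simplification)."""
    return np.repeat(np.repeat(x, s, axis=0), s, axis=1)

def placeholder_weights(x_lr):
    """Hypothetical stand-in for the CNN: favour pixels near mid-grey."""
    return np.exp(-((x_lr - 0.5) ** 2) / (2 * 0.2 ** 2))

def guided_upsample(guide_hr, guide_lr, w_lr, s, r=1, eps=1e-4):
    """Fit w ~ a * guide + b at low resolution, then apply at full resolution."""
    mean_i = box_mean(guide_lr, r)
    mean_w = box_mean(w_lr, r)
    cov_iw = box_mean(guide_lr * w_lr, r) - mean_i * mean_w
    var_i = box_mean(guide_lr * guide_lr, r) - mean_i ** 2
    a = cov_iw / (var_i + eps)
    b = mean_w - a * mean_i
    # Smooth the linear coefficients, upsample them, and use the
    # full-resolution exposure itself as the guidance image.
    a_hr = upsample(box_mean(a, r), s)
    b_hr = upsample(box_mean(b, r), s)
    return a_hr * guide_hr + b_hr

def fuse_sequence(images, s=4):
    """images: (K, H, W) array in [0, 1]; returns the fused (H, W) image."""
    images = np.asarray(images, dtype=np.float64)
    weights = []
    for x in images:  # the same predictor is shared by every exposure
        x_lr = downsample(x, s)
        w_lr = placeholder_weights(x_lr)
        weights.append(guided_upsample(x, x_lr, w_lr, s))
    w = np.abs(np.stack(weights))             # assumption: non-negative weights
    w /= w.sum(axis=0, keepdims=True) + 1e-8  # assumption: sum to one per pixel
    return (w * images).sum(axis=0)
```

Calling `fuse_sequence(np.stack([under, over]), s=4)` on two equally sized exposures returns one fused image of the same size. Only the weight prediction runs at low resolution. The guidance and the final fusion use the full-resolution inputs, which is the property the paragraph above relies on.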

BLOCK DIAGRAM