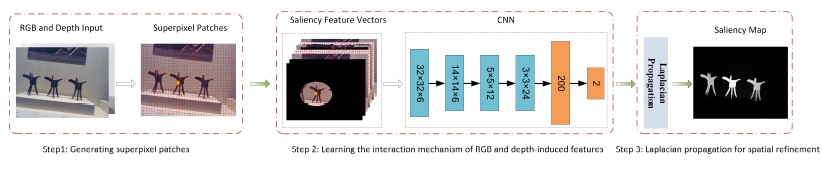

Numerous efforts have been made to design various low-level saliency cues for RGBD saliency detection, such as color and depth contrast features as well as background and color compactness priors. However, how these low-level saliency cues interact with each other and how they can be effectively incorporated to generate a master saliency map remain challenging problems. In this paper, we design a new convolutional neural network (CNN) to automatically learn the interaction mechanism for RGBD salient object detection. In contrast to existing works, in which raw image pixels are fed directly to the CNN, the proposed method takes advantage of the knowledge obtained in traditional saliency detection by adopting various flexible and interpretable saliency feature vectors as inputs. This guides the CNN to learn a combination of existing features to predict saliency more effectively, which presents a less complex problem than operating on the pixels directly. We then integrate a superpixel-based Laplacian propagation framework with the trained CNN to extract a spatially consistent saliency map by exploiting the intrinsic structure of the input image. Extensive quantitative and qualitative experimental evaluations on three datasets demonstrate that the proposed method consistently outperforms state-of-the-art methods.

Deep Neural Network Model.

In this paper, we propose a deep fusion framework to automatically learn the interaction mechanism between RGB and depth-induced saliency features for RGBD saliency detection. The proposed method takes advantage of the representation learning power of CNNs to extract hyper-features by fusing different hand-designed saliency features for the detection of salient objects. We first compute several feature vectors from the original RGBD image, which include local and global contrasts, a background prior, and a color compactness prior. We then propose a CNN architecture to incorporate these regional feature vectors into more representative and unified features. Compared with the raw image pixels, these extracted saliency features are well designed and can more effectively guide the training of the CNN toward saliency optimization. Because the resulting saliency map may suffer from local inconsistencies and noisy false positives, we further integrate a superpixel-based Laplacian propagation framework with the proposed CNN. This approach propagates high-confidence saliency to other regions by considering color and depth consistency and the intrinsic structure of the input image; thus, it is possible to remove noisy values and produce a smooth saliency map. The Laplacian propagation problem is solved with rapid convergence by means of the conjugate gradient method with a preconditioner.
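The propagation step described above can be illustrated with a small sketch. It assumes a common graph-based formulation that the text does not spell out: superpixels are graph nodes, edge weights come from a Gaussian affinity on color and depth features, and the smoothed saliency s solves (L + λC)s = λCy, where L is the graph Laplacian, C marks high-confidence superpixels, and y holds their saliency values. The names `affinity`, `build_system`, and `pcg`, the parameters `sigma` and `lam`, and the Jacobi (diagonal) preconditioner are all illustrative assumptions, not the paper's actual choices.

```python
import math


def affinity(feat_i, feat_j, sigma=0.5):
    """Gaussian similarity between two superpixel feature tuples (e.g. color, depth)."""
    d2 = sum((a - b) ** 2 for a, b in zip(feat_i, feat_j))
    return math.exp(-d2 / (2.0 * sigma ** 2))


def build_system(n, edges, seeds, lam=1.0):
    """Assemble dense A = L + lam*C and b = lam*C*y.

    edges: iterable of (i, j, weight) for adjacent superpixels.
    seeds: dict mapping high-confidence superpixel index -> saliency value.
    """
    A = [[0.0] * n for _ in range(n)]
    b = [0.0] * n
    for i, j, w in edges:
        A[i][i] += w
        A[j][j] += w
        A[i][j] -= w
        A[j][i] -= w
    for i, y in seeds.items():
        A[i][i] += lam
        b[i] += lam * y
    return A, b


def matvec(A, x):
    return [sum(a * v for a, v in zip(row, x)) for row in A]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def pcg(A, b, tol=1e-10, max_iter=200):
    """Conjugate gradient with a Jacobi preconditioner for a symmetric positive definite A."""
    n = len(b)
    inv_diag = [1.0 / A[i][i] for i in range(n)]  # M^-1 for M = diag(A)
    x = [0.0] * n
    r = b[:]                                      # residual b - A x with x = 0
    z = [d * ri for d, ri in zip(inv_diag, r)]    # preconditioned residual
    p = z[:]
    rz = dot(r, z)
    for it in range(max_iter):
        if math.sqrt(dot(r, r)) < tol:
            return x, it
        Ap = matvec(A, p)
        alpha = rz / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        z = [d * ri for d, ri in zip(inv_diag, r)]
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iter


if __name__ == "__main__":
    # Five superpixels in a chain; each tuple is a made-up (color, depth) feature.
    feats = [(0.9, 0.2), (0.85, 0.25), (0.8, 0.2), (0.2, 0.8), (0.25, 0.85)]
    edges = [(i, i + 1, affinity(feats[i], feats[i + 1])) for i in range(4)]
    seeds = {0: 1.0, 4: 0.0}  # confident foreground and background superpixels
    A, b = build_system(len(feats), edges, seeds)
    saliency, iters = pcg(A, b)
    print([round(s, 3) for s in saliency], "iterations:", iters)
```

In this toy setup, saliency stays nearly constant across superpixels with similar color and depth and changes mostly across the weak edge between dissimilar neighbors, which is the smoothing behavior the paragraph describes. A real implementation would use sparse matrices and possibly a stronger preconditioner.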

BLOCK DIAGRAM